| < Day Day Up > |

This section presents Strassen's remarkable recursive algorithm for multiplying n × n matrices, which runs in Θ(nlg 7) = O(n2.81) time. For sufficiently large values of n, therefore, it outperforms the naive Θ(n3) matrix-multiplication algorithm MATRIX-MULTIPLY from Section 25.1.

Strassen's algorithm can be viewed as an application of a familiar design technique: divide and conquer. Suppose we wish to compute the product C = AB, where each of A, B, and C are n × n matrices. Assuming that n is an exact power of 2, we divide each of A, B, and C into four n/2 × n/2 matrices, rewriting the equation C = AB as follows:

(Exercise 28.2-2 deals with the situation in which n is not an exact power of 2.) Equation (28.8) corresponds to the four equations

| (28.10) |

| (28.11) |

| (28.12) |

Each of these four equations specifies two multiplications of n/2 × n/2 matrices and the addition of their n/2 × n/2 products. Using these equations to define a straightforward divide-and-conquer strategy, we derive the following recurrence for the time T (n) to multiply two n × n matrices:

Unfortunately, recurrence (28.13) has the solution T(n) = Θ(n3), and thus this method is no faster than the ordinary one.

Strassen discovered a different recursive approach that requires only 7 recursive multiplications of n/2 × n/2 matrices and Θ(n2) scalar additions and subtractions, yielding the recurrence

Strassen's method has four steps:

Divide the input matrices A and B into n/2 × n/2 submatrices, as in equation (28.8).

Using Θ(n2) scalar additions and subtractions, compute 14 matrices A1, B1, A2, B2, . . . , A7, B7, each of which is n/2 × n/2.

Recursively compute the seven matrix products Pi = Ai Bi for i = 1, 2, . . . , 7.

Compute the desired submatrices r, s, t, u of the result matrix C by adding and/or subtracting various combinations of the Pi matrices, using only Θ(n2) scalar additions and subtractions.

Such a procedure satisfies the recurrence (28.14). All that we have to do now is fill in the missing details.

It is not clear exactly how Strassen discovered the submatrix products that are the key to making his algorithm work. Here, we reconstruct one plausible discovery method.

Let us guess that each matrix product Pi can be written in the form

where the coefficients αij, βij are all drawn from the set {-1, 0, 1}. That is, we guess that each product is computed by adding or subtracting some of the submatrices of A, adding or subtracting some of the submatrices of B, and then multiplying the two results together. While more general strategies are possible, this simple one turns out to work.

If we form all of our products in this manner, then we can use this method recursively without assuming commutativity of multiplication, since each product has all of the A submatrices on the left and all of the B submatrices on the right. This property is essential for the recursive application of this method, since matrix multiplication is not commutative.

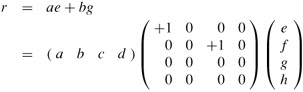

For convenience, we shall use 4 × 4 matrices to represent linear combinations of products of submatrices, where each product combines one submatrix of A with one submatrix of B as in equation (28.15). For example, we can rewrite equation (28.9) as

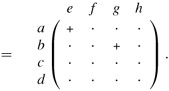

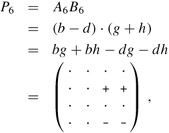

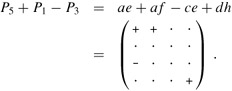

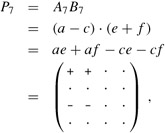

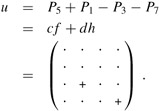

The last expression uses an abbreviated notation in which "+" represents +1, "·" represents 0, and "-" represents -1. (From here on, we omit the row and column labels.) Using this notation, we have the following equations for the other submatrices of the result matrix C:

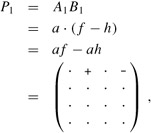

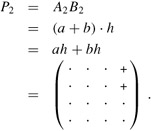

We begin our search for a faster matrix-multiplication algorithm by observing that the submatrix s can be computed as s = P1 + P2, where P1 and P2 are computed using one matrix multiplication each:

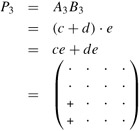

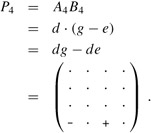

The matrix t can be computed in a similar manner as t = P3 + P4, where

and

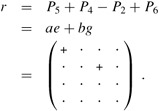

Let us define an essential term to be one of the eight terms appearing on the right-hand side of one of the equations (28.9)-(28.12). We have now used 4 products to compute the two submatrices s and t whose essential terms are af, bh, ce, and dg. Note that P1 computes the essential term af, P2 computes the essential term bh, P3 computes the essential term ce, and P4 computes the essential term dg. Thus, it remains for us to compute the remaining two submatrices r and u, whose essential terms are ae, bg, cf, and dh, without using more than 3 additional products. We now try the innovation P5 in order to compute two essential terms at once:

|

P5 |

= |

A5B5 |

|

= |

(a + d) · (e + h) |

|

|

= |

ae + ah + de + dh |

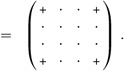

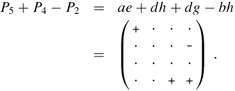

In addition to computing both of the essential terms ae and dh, P5 computes the inessential terms ah and de, which need to be canceled somehow. We can use P4 and P2 to cancel them, but two other inessential terms then appear:

By adding an additional product

however, we obtain

We can obtain u in a similar manner from P5 by using P1 and P3 to move the inessential terms of P5 in a different direction:

By subtracting an additional product

we now obtain

The 7 submatrix products P1, P2, . . . , P7 can thus be used to compute the product C = AB, which completes the description of Strassen's method.

From a practical point of view, Strassen's algorithm is often not the method of choice for matrix multiplication, for four reasons:

The constant factor hidden in the running time of Strassen's algorithm is larger than the constant factor in the naive Θ(n3) method.

When the matrices are sparse, methods tailored for sparse matrices are faster.

Strassen's algorithm is not quite as numerically stable as the naive method.

The submatrices formed at the levels of recursion consume space.

The latter two reasons were mitigated around 1990. Higham [145] demonstrated that the difference in numerical stability had been overemphasized; although Strassen's algorithm is too numerically unstable for some applications, it is within acceptable limits for others. Bailey et al. [30] discuss techniques for reducing the memory requirements for Strassen's algorithm.

In practice, fast matrix-multiplication implementations for dense matrices use Strassen's algorithm for matrix sizes above a "crossover point," and they switch to the naive method once the subproblem size reduces to below the crossover point. The exact value of the crossover point is highly system dependent. Analyses that count operations but ignore effects from caches and pipelining have produced crossover points as low as n = 8 (by Higham [145]) or n = 12 (by Huss-Lederman et al. [163]). Empirical measurements typically yield higher crossover points, with some as low as n = 20 or so. For any given system, it is usually straightforward to determine the crossover point by experimentation.

By using advanced techniques beyond the scope of this text, one can in fact multiply n × n matrices in better than Θ(nlg 7) time. The current best upper bound is approximately O(n2.376). The best lower bound known is just the obvious Ω(n2) bound (obvious because we have to fill in n2 elements of the product matrix). Thus, we currently do not know exactly how hard matrix multiplication really is.

How would you modify Strassen's algorithm to multiply n × n matrices in which n is not an exact power of 2? Show that the resulting algorithm runs in time Θ(nlg 7).

What is the largest k such that if you can multiply 3 × 3 matrices using k multiplications (not assuming commutativity of multiplication), then you can multiply n × n matrices in time o(nlg 7)? What would the running time of this algorithm be?

V. Pan has discovered a way of multiplying 68 × 68 matrices using 132,464 multiplications, a way of multiplying 70 × 70 matrices using 143,640 multiplications, and a way of multiplying 72 × 72 matrices using 155,424 multiplications. Which method yields the best asymptotic running time when used in a divide-and-conquer matrix-multiplication algorithm? How does it compare to Strassen's algorithm?

| < Day Day Up > |